I started with simple starfields and had a working solution in less than an hour, simply by drawing star sprites at random positions.

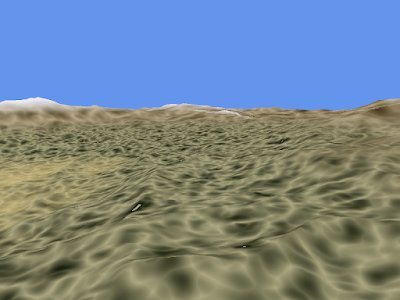

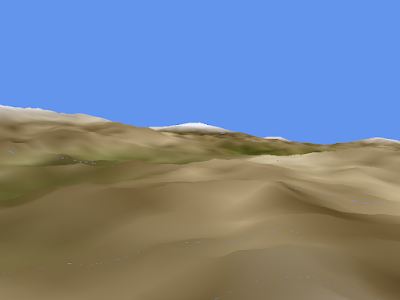

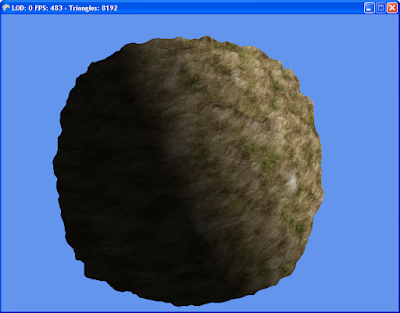

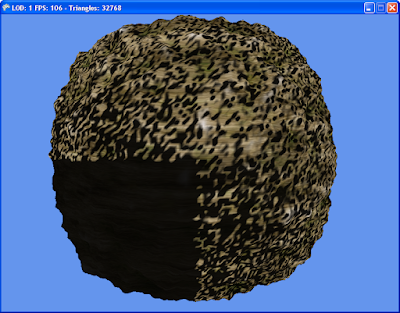

This yields the following result:

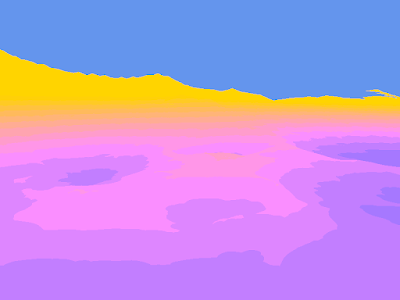

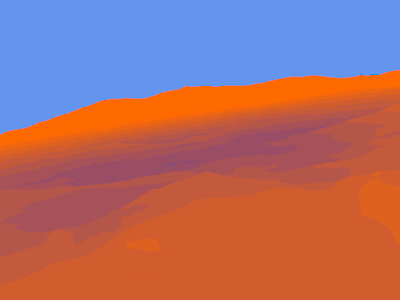
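Here is a minimal sketch of the same idea. It is written in Python rather than the C# used elsewhere in the post so that it runs standalone. Stars are scattered at random pixel positions with a random brightness, then added into a grayscale buffer. The `Star` type, `scatter_stars`, `splat_stars`, and the brightness curve are all hypothetical stand-ins for drawing real sprites. A fixed seed keeps the sky identical between runs.

```python
import random
from dataclasses import dataclass


@dataclass
class Star:
    x: int             # pixel column
    y: int             # pixel row
    brightness: float  # additive intensity of the "sprite", 0.1..1.0


def scatter_stars(count: int, width: int, height: int, seed: int = 0) -> list[Star]:
    """Place `count` stars uniformly at random (hypothetical helper)."""
    rng = random.Random(seed)  # seeded so the same sky is produced every run
    stars = []
    for _ in range(count):
        # Cubing a uniform value skews brightness toward dim stars,
        # so only a few bright ones stand out.
        brightness = 0.1 + 0.9 * rng.random() ** 3
        stars.append(Star(rng.randrange(width), rng.randrange(height), brightness))
    return stars


def splat_stars(stars: list[Star], width: int, height: int) -> list[list[float]]:
    """Additively draw each star as a single pixel, clamped to 1.0."""
    buf = [[0.0] * width for _ in range(height)]
    for s in stars:
        buf[s.y][s.x] = min(1.0, buf[s.y][s.x] + s.brightness)
    return buf


if __name__ == "__main__":
    sky = splat_stars(scatter_stars(200, 64, 32, seed=42), 64, 32)
    print(sum(v > 0 for row in sky for v in row), "lit pixels")
```

Swapping the single-pixel splat for a small sprite image, randomly scaled per star, gets closer to what the screenshots show.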

Next, to make the stars more interesting, I looked into implementing nebula clouds. I knew that some summation of Perlin noise would be the best approach, so I decided to implement a Perlin noise function in C#. I already have a really fast implementation in HLSL, but I wanted the full power of breakpoints and variable watches. I ended up using a standard fBm (fractional Brownian motion) summation with an exponential filter applied to it.

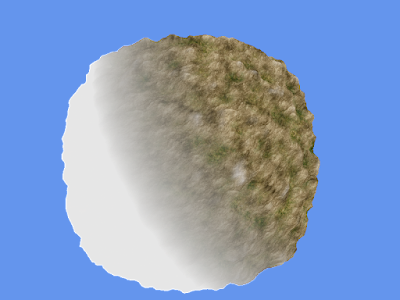

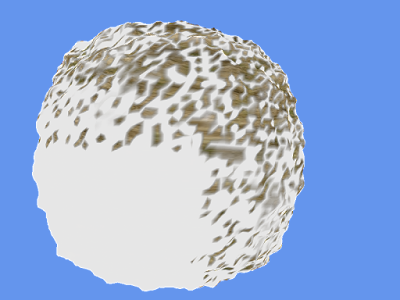

This helped to produce this nebula cloud image:

I still need to blend these two results together and construct them into a decent cubemap.
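Here is a minimal Python sketch of that pipeline. It is not the author's C# code. It has three parts:

- 2D gradient (Perlin) noise, using Perlin's quintic fade curve, a permutation-table hash, and 8 fixed gradients.
- An fBm sum that doubles the frequency and halves the amplitude each octave.
- An exponential filter that turns the sum into cloud density.

The post doesn't say which exponential filter it used. The form assumed here, `1 - exp(-sharpness * max(0, n - cover))`, is one common choice. The `cover` and `sharpness` values and the other defaults are illustrative guesses.

```python
import math
import random

# Eight fixed 2D gradient directions, selected by the hash.
GRADIENTS = [(1, 1), (-1, 1), (1, -1), (-1, -1), (1, 0), (-1, 0), (0, 1), (0, -1)]


def make_permutation(seed: int = 0) -> list[int]:
    """Shuffled 0..255 table, doubled so perm[i + j] never needs wrapping."""
    p = list(range(256))
    random.Random(seed).shuffle(p)
    return p + p


def fade(t: float) -> float:
    # Perlin's quintic ease curve: 6t^5 - 15t^4 + 10t^3
    return t * t * t * (t * (t * 6 - 15) + 10)


def perlin2(x: float, y: float, perm: list[int]) -> float:
    """2D gradient noise; returns 0 at every integer lattice point."""
    xi, yi = math.floor(x), math.floor(y)
    xf, yf = x - xi, y - yi          # position inside the lattice cell
    xi, yi = xi & 255, yi & 255      # wrap cell coordinates into the table

    def corner(ix: int, iy: int, dx: float, dy: float) -> float:
        gx, gy = GRADIENTS[perm[perm[ix] + iy] & 7]
        return gx * dx + gy * dy     # dot(gradient, offset from corner)

    n00 = corner(xi, yi, xf, yf)
    n10 = corner(xi + 1, yi, xf - 1, yf)
    n01 = corner(xi, yi + 1, xf, yf - 1)
    n11 = corner(xi + 1, yi + 1, xf - 1, yf - 1)
    u, v = fade(xf), fade(yf)
    nx0 = n00 + u * (n10 - n00)
    nx1 = n01 + u * (n11 - n01)
    return nx0 + v * (nx1 - nx0)


def fbm(x, y, perm, octaves=6, lacunarity=2.0, gain=0.5) -> float:
    """Fractional Brownian motion: sum octaves of noise at rising frequency."""
    total, amplitude, frequency = 0.0, 1.0, 1.0
    for _ in range(octaves):
        total += amplitude * perlin2(x * frequency, y * frequency, perm)
        frequency *= lacunarity
        amplitude *= gain
    return total


def exp_filter(n: float, cover: float = 0.0, sharpness: float = 4.0) -> float:
    """Assumed exponential filter: 0 at or below `cover`, rising toward 1 above it."""
    c = max(0.0, n - cover)
    return 1.0 - math.exp(-sharpness * c)


def nebula_image(width, height, scale=0.05, seed=0) -> list[list[float]]:
    """Cloud density in [0, 1) for each pixel of a width x height image."""
    perm = make_permutation(seed)
    return [[exp_filter(fbm(px * scale, py * scale, perm))
             for px in range(width)]
            for py in range(height)]
```

Raising `cover` thins the clouds into isolated wisps. Raising `sharpness` gives the cloud edges a harder falloff.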

World of Warcraft + 360 Controller

All of the starfield and nebula work was done a couple of weeks ago. My co-workers recently pressured me into playing World of Warcraft again. (About six months ago I got up to level 18 and then quit.) As I played, I kept thinking about how bad the controls were. I was moving my hands all over the keyboard to use all of my skills. That was fine for grinding along and completing quests, but I always performed horribly in groups and duels. I desperately wanted to play with a game controller, but World of Warcraft didn't support one. A couple of applications written by other people added gamepad support, but I didn't care for them either. One was very awkward: it built up macro commands that you then had to "submit" by pulling the right trigger. Another seemed decent, but it carried a subscription fee of $20 a year.

I decided that if I wanted something done right, I had to do it myself. So I threw together a quick XNA application that read the 360 controller's input and then used the Win32 function SendInput to place keyboard and mouse input events into the Windows input queue. I mapped out all of the controls, and I was then able to play World of Warcraft using a 360 controller! I can target enemies and allies, use all 12 skills on the current action bar, switch action bars, loot corpses, and even move the camera with full analog control. It has completely changed the game for me, and I even won my first duel last night.
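The core of such a bridge is turning controller *state* into input *events*:

- a key-down when a button is first pressed,
- a key-up when it is released,
- a relative mouse move scaled from the analog stick.

Below is a platform-independent Python sketch of that translation. It does not call SendInput itself. The button names, key bindings, dead zone, and sensitivity are all hypothetical. In a real Windows app, each resulting event would be packed into an `INPUT` structure and passed to `SendInput`.

```python
from dataclasses import dataclass

# Hypothetical bindings: controller button -> key name.
BINDINGS = {"A": "1", "B": "2", "X": "3", "Y": "4", "RB": "TAB"}

DEAD_ZONE = 0.2     # ignore small stick drift (assumed value)
SENSITIVITY = 12.0  # pixels per update at full deflection (assumed value)


@dataclass(frozen=True)
class KeyEvent:
    key: str
    down: bool  # True = key press, False = key release


def button_events(prev: set[str], curr: set[str]) -> list[KeyEvent]:
    """Emit events only on state changes, so a held button is one key press."""
    events = []
    for button, key in BINDINGS.items():
        was, now = button in prev, button in curr
        if now and not was:
            events.append(KeyEvent(key, True))
        elif was and not now:
            events.append(KeyEvent(key, False))
    return events


def stick_to_mouse(sx: float, sy: float) -> tuple[int, int]:
    """Map a stick position in [-1, 1] to a relative mouse move."""
    def axis(v: float) -> float:
        if abs(v) < DEAD_ZONE:
            return 0.0
        # Rescale so movement starts from 0 just outside the dead zone.
        sign = 1.0 if v > 0 else -1.0
        return sign * (abs(v) - DEAD_ZONE) / (1.0 - DEAD_ZONE)
    # Assumes stick y is positive when pushed up; screen y grows downward.
    return round(axis(sx) * SENSITIVITY), round(-axis(sy) * SENSITIVITY)
```

Sending events only on transitions matters. Re-sending key-down every frame while a button is held would look like keyboard auto-repeat to the game.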

I have since added mappings for Call of Duty 4 for my brother. I'm planning to generalize the project so that the input mappings can be defined in XML and changed without recompiling.
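That XML format doesn't exist yet, so the schema below is purely hypothetical. It has one `<game>` element per title, and each one contains `<bind>` elements that map a controller button to a key. The bindings are illustrative, not taken from the actual app. This Python sketch loads the file with the standard library's `xml.etree.ElementTree` and shows how little code a data-driven version needs.

```python
import xml.etree.ElementTree as ET

# Hypothetical mapping file; element names, attributes, and bindings are illustrative.
SAMPLE = """<mappings>
  <game name="World of Warcraft">
    <bind button="A" key="1"/>
    <bind button="B" key="2"/>
    <bind button="RB" key="TAB"/>
  </game>
  <game name="Call of Duty 4">
    <bind button="A" key="SPACE"/>
    <bind button="B" key="C"/>
  </game>
</mappings>"""


def load_mappings(xml_text: str) -> dict[str, dict[str, str]]:
    """Return {game name: {button: key}} parsed from the XML text."""
    root = ET.fromstring(xml_text)
    mappings = {}
    for game in root.findall("game"):
        binds = {}
        for bind in game.findall("bind"):
            binds[bind.get("button")] = bind.get("key")
        mappings[game.get("name")] = binds
    return mappings
```

`Element.get` returns `None` for a missing attribute, so a real loader would want to validate each `<bind>` before using it.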